- stanford Handout

- Artificial Intelligence: A Modern Approach(R&N) chap 15

MARKOV ASSUMPTION

Limited horizon assumption: not depend on whole history

\[P(z_t|z_{t-1} z_{t-2} …z_1) = P(z_t|z_{t-1})\]

Stationary process assumption: transition is not depend on time

\[P(z_t|z_{t-1}) = P(z_2|z_1)\quad t\in 2…T\]

Transition Matrix

The model need to maximize the log-likehood of the state sequence for transition matrix.\( \vec{z}\) is the state sequence and $A$ is transition matrix. \[\log P(\vec{z};A)\] By the Lagrangian Multiplier, the optimal solution is given by \[A_{ij}=\dfrac{\sum_{t=1}^T1\{z_{t-1}=s_i\wedge z_t=s_j\}}{\sum_{t=1}^T1\{z_{t-1}=s_i\}}\]

HMM INTUITION

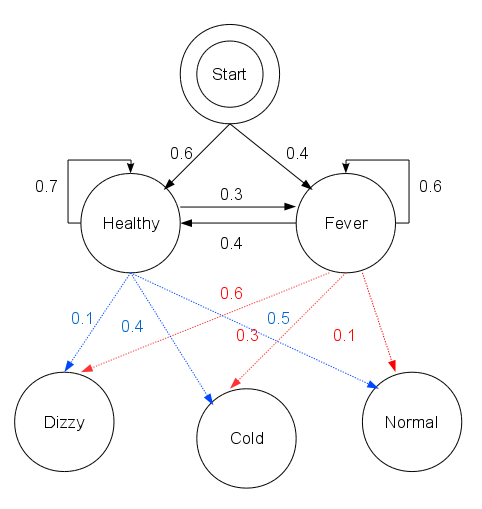

Both wiki and R&N have a good example that illustrate the intuition of HMM. Basically, we have known observation sequence and unknown state sequence. As an example in wiki, the known observation sequence is the patient feeling(normal, cold, dizzy) and the unknown state sequence is the health condition of the patient(Healthy, Fever). Below is the image in wiki Viterbi algorithm.

By the probability of state transition(P(Healthy->Fever)) and emission matrix of state to observation(P(Normal\|Healthy)), we are able to approximate the state sequence or predict the next state or maybe next observation based on the specific tasks.